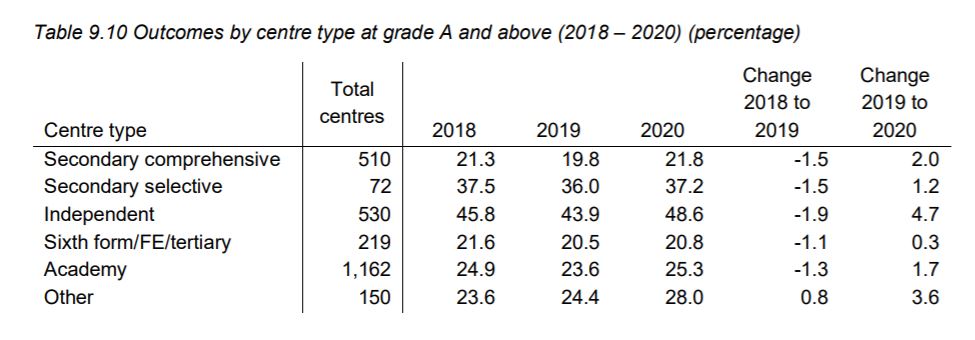

Private schools in England saw a greater increase in the proportion of students getting top A-level grades than other types of school compared to last year.

The proportion of pupils getting As and A*s at fee-paying institutions is up 4.7 percentage points since 2019.

By contrast, comprehensives have seen a 2-percentage-point rise, and grammar schools are up 1.2 percentage points. Sixth form and FE colleges saw the smallest increase – just 0.3 percentage points.

So is this year’s exam system biased in favour of independent schools? Let’s take a look.

Moderation, moderation, moderation

With exams unable to happen as usual, regulators in every nation of the UK asked teachers to estimate what each pupil would have got under normal circumstances. They then “moderated” the results to make sure schools weren’t being too harsh or too generous.

That moderation proved controversial in Scotland after some students were marked down simply because their school had done badly in the past. On Tuesday, the Scottish government announced that anyone who had had their result downgraded would be awarded their (higher) teacher-estimated grade instead.

The English system is broadly similar to the one that sparked the Scottish row – teacher-estimated grades were moderated using a system that includes looking back at a school’s past performance.

The two systems are far from identical, though. And in the English case, it looks like the effect on deprived students isn’t nearly as pronounced as it was north of the border.

Were private schools more likely to be spared moderation?

Nevertheless, there has been some interest in data from the English regulator, Ofqual, that shows private schools saw a greater increase in the proportion of students getting As and A*s than other types of school this year.

We asked Ofqual whether this could be a result of its moderation system, also known as a “standardisation model”.

A spokesperson told FactCheck: “Our standardisation model, for which we have published full details, does not distinguish between different types of centres [schools], and therefore contains no bias, either in favour or against, a particular centre type.

“One of the factors for the increases in higher grades for some centres will be if they have smaller cohorts because teachers’ predictions are given more weight in these circumstances. Other factors will also have an effect, like potential changes in prior attainment profiles of students at Key Stage 2”.

We think that point about “smaller cohorts” is significant. Here’s why…

On average, teachers across all types of school awarded more generous grades than the regulator’s statistical model produced. So any student who’s exempted from moderation – or has its effects diluted – is likely to do better.

The Ofqual report says: “centres with small cohorts in a given subject either receive their CAGs or have greater weight placed on their CAGs than the statistical evidence”. CAGs are “centre assessment grades”, i.e. teacher predictions.

The thinking is that with very small numbers of current and/or past students, it’s hard to work out statistically reliable patterns over time.

In practice, this means that if you’re one of a very small number of students taking a particular subject at your school, you’re likely to be awarded the more generous grade your teacher estimated for you (or something close to it).
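To make the mechanism concrete, here is a minimal sketch of how a cohort-size weighting of this kind could work. It is not Ofqual’s published model: the thresholds (`small`, `large`), the straight-line taper between them and the numeric “grade points” are all illustrative assumptions, and the function names are hypothetical.

```python
def cag_weight(cohort_size: int, small: int = 5, large: int = 15) -> float:
    """Share of the final grade taken from the teacher estimate (CAG).

    ASSUMPTION: the `small` and `large` thresholds and the linear taper
    between them are illustrative only; they are not taken from the article.
    """
    if cohort_size <= small:
        return 1.0  # very small cohort: teacher estimate used as-is
    if cohort_size >= large:
        return 0.0  # large cohort: full statistical standardisation
    return (large - cohort_size) / (large - small)


def blended_grade(cag_points: float, model_points: float, cohort_size: int) -> float:
    """Weighted mix of the teacher estimate and the statistical model's grade."""
    w = cag_weight(cohort_size)
    return w * cag_points + (1 - w) * model_points


# Hypothetical pupil: teacher estimate worth 5 points, model output worth 4.
for size in (3, 10, 30):
    print(size, cag_weight(size), blended_grade(5.0, 4.0, size))
```

Under these assumptions, the same pupil keeps the full teacher estimate in a cohort of three, gets a halfway grade in a cohort of ten, and receives the lower model grade in a cohort of thirty.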

It seems private schools are more likely to have these small cohorts.

Analysts from the education research company FFT Datalab estimate that independent schools enter 9.4 students for each A-level subject on average. For state-funded schools run by the local authority, the average is 11 pupils. For sixth form and further education colleges, it’s 33.

So it follows that private school students were more likely than students at other types of school to get their teacher-estimated grades, or to have more weight placed on them. (To be clear, small cohorts can exist at any type of school, but they seem to be more prevalent at fee-paying ones.)

We should say that FFT Datalab’s school categories don’t quite match up with Ofqual’s.

Nevertheless, it’s worth noting that sixth form and FE colleges saw by far the smallest increase in top grades this year – just 0.3 percentage points. And by FFT Datalab’s estimates, they had the largest average cohorts.

This supports the theory that small-cohort students benefited from Ofqual’s system by having more weight placed on teacher estimates – while pupils at bigger providers lost out because they were put through full statistical standardisation.

We asked Ofqual whether it had considered the fact that, by all but exempting small-cohort centres from the full moderation process and instead matching or getting closer to teacher estimates, it might confer an advantage on independent schools.

A spokesperson told FactCheck:

“The arrangements we put in place this summer are the fairest possible to enable students to move on to further study or employment as planned.

“Centre assessment grades are the most reliable source of evidence for small cohorts and is the fairest approach available. While use of the model is the fairest possible way to calculate grades for larger cohorts, it would not have been feasible to apply it to very low entries which would include special schools, pupil referral units and tutorial centres and small subject cohorts within larger centres.”

Does this explain the difference between 2019 and 2020?

But even if it’s true that the structure of the system might theoretically advantage private school students over others, we still don’t know if that fully explains the fact that independent schools saw a bigger increase in top grades than other schools.

It could be that this crop of private school sixth-formers just happened to be especially good – after all, we see variation between years even in normal circumstances.

As it stands, we don’t think there’s data in the public domain that can tell us definitively how much of this disparity is down to Ofqual’s moderation process. We’ll update this article if we find any.

Could Ofqual have seen this coming?

In the spring – before schools had even submitted their estimates – Ofqual acknowledged that teachers generally tend to be optimistic when predicting their own students’ results. So it would have been reasonable to expect that giving more weight to teacher estimates for some students and not others would probably confer an advantage.

Ofqual would also have known that private school class sizes are smaller on average than those in state schools (and that further education and sixth form colleges have the very largest groups).

So these two key bits of information were available to them at the time they decided to give students in small cohorts the benefit of teacher-estimated grades.

Given the extensive analysis they carried out in advance of creating this model (on gender, ethnicity, economic status, etc.), we think it would have been possible for Ofqual to anticipate the potential biases this decision might create in favour of private schools.

What was the alternative?

All that said, it remains true that it’s very difficult to draw reliable patterns from small class sizes.

Had Ofqual put small-cohort students through the full statistical standardisation model, it would probably have put them at a disadvantage compared to other pupils. It would only take one or two outliers from a previous school year to skew the 2020 results for individuals.

And it’s not just independent school students who would have suffered. Those at schools with little or no historical data, special schools with small class sizes, or students taking rarefied subjects would have also lost out.
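The point about outliers is simple arithmetic, and a short sketch shows the scale of it. The cohort sizes below are hypothetical round numbers chosen for illustration (33 echoes the college average quoted earlier), and the function names are our own.

```python
def top_grade_share(top_grades: int, cohort_size: int) -> float:
    """Percentage of a cohort awarded a top grade."""
    return 100 * top_grades / cohort_size


def swing_from_one_pupil(cohort_size: int) -> float:
    """Percentage-point change in the top-grade share from one extra top grade."""
    return top_grade_share(1, cohort_size) - top_grade_share(0, cohort_size)


# ASSUMPTION: illustrative cohort sizes, not data from Ofqual or the article.
for size in (5, 10, 33):
    swing = swing_from_one_pupil(size)
    print(f"cohort of {size}: one pupil moves the top-grade share by {swing:.1f} points")
```

In a past cohort of five, a single exceptional pupil shifts the historical top-grade share by 20 percentage points; in a cohort of 33, the same pupil shifts it by about three. A model that leans on that history would carry the distortion straight into this year’s grades.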

FactCheck verdict

Private schools in England saw a bigger increase in the proportion of students getting As and A*s than others compared to last year.

The English exams regulator Ofqual says its moderation system “contains no bias, either in favour or against” any particular type of school.

However, Ofqual decided that in schools with small numbers of students taking each subject, pupils would have more weight placed on their teacher-estimated grades – rather than going through the full “statistical standardisation” process.

Because we know that teachers were generous with their predictions overall, this meant that these “small cohort” students were likely to do better than others.

And we also know that fee-paying schools tend to have smaller cohorts than other types of school. This may fully or partly explain why they saw a bigger rise in top grades this year, and perhaps why further education and sixth form colleges, which have by far the largest average cohorts, saw the very smallest increase in high scores.

But the data we have is not definitive.

Either way, we think Ofqual might have foreseen the possibility that independent schools would reap the greatest rewards from this decision.

Nevertheless, there are sound statistical reasons for exempting tiny cohorts from the full moderation process. In fact, it’s not clear whether Ofqual could have taken a different approach without actually penalising students in small classes. That would have also affected pupils at special schools, new schools and those taking unusual subjects.